Why Mark Zuckerberg doesn’t realize Facebook is evil

Facebook whistleblower Frances Haugen testified in Congress yesterday. And Mark Zuckerberg was mystified. He was surely thinking, “Can’t they see that we’re out to help humanity?”

Today I deconstruct his sincere statement to employees after all the negative news about Facebook in the past month. If you read this critically and with the right perspective, I believe it reveals everything about how Facebook thinks, and why it cannot understand all the criticism.

Two insights explain the difference between Zuckerberg's world view and the view of his critics like me.

- Facebook is not dedicated to money over everything else. This is a common misconception, but if you look at Facebook’s major strategic decisions, they are not about money. They are about maintaining the integrity of the algorithm. If the algorithm prevails, Facebook prevails (and, incidentally, is profitable). Zuckerberg believes that every bit of human judgment that the system requires is a flaw.

- By definition, the algorithm connects and connection is good. Therefore, Facebook is doing good. Zuckerberg believes that the bad things that the algorithm causes or allows are just minor side effects of the overall goodness of the algorithm. He is mystified why everyone must focus on the marginal badness rather than the overall awesomeness of the algorithm and its power to connect.

Now you can understand Zuckerberg’s statement

In order for Facebook to continue to thrive, its employees must believe in the two insights I’ve just described. So as you read Zuckerberg’s Facebook post, look for the justification, and you’ll see why he sees only the best when the world sees the worst.

In the passages below, the quotes are verbatim from Zuckerberg’s post and the commentary that follows is mine.

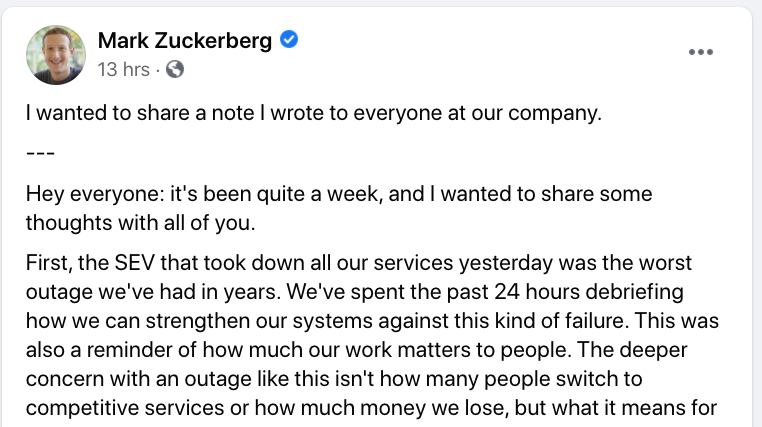

Hey everyone: it’s been quite a week, and I wanted to share some thoughts with all of you.

First, the SEV that took down all our services yesterday was the worst outage we’ve had in years. We’ve spent the past 24 hours debriefing how we can strengthen our systems against this kind of failure. This was also a reminder of how much our work matters to people. The deeper concern with an outage like this isn’t how many people switch to competitive services or how much money we lose, but what it means for the people who rely on our services to communicate with loved ones, run their businesses, or support their communities.

The cause of Facebook's extended outage was that Facebook uses its own systems to run everything at the company (even the key cards that open the doors), and when Facebook unintentionally nuked its internet presence, everything stopped working. This is a colossally arrogant way to run a company, but it is consistent with the idea that what Facebook creates is the best possible way to do everything. That's why Zuckerberg frames this major, extended, self-inflicted outage of all the company's systems in terms of how the world was deprived of the wonderfulness of the algorithm for a little while, rather than taking responsibility for the stupidity of a single-point-of-failure security system.

Second, now that today’s testimony is over, I wanted to reflect on the public debate we’re in. I’m sure many of you have found the recent coverage hard to read because it just doesn’t reflect the company we know. We care deeply about issues like safety, well-being and mental health. It’s difficult to see coverage that misrepresents our work and our motives. At the most basic level, I think most of us just don’t recognize the false picture of the company that is being painted.

If you believe you are doing good with your algorithm, then criticism of the harm it causes doesn’t ring true. “Most of us don’t recognize the false picture” = “I don’t see the problems.”

Many of the claims don’t make any sense. If we wanted to ignore research, why would we create an industry-leading research program to understand these important issues in the first place? If we didn’t care about fighting harmful content, then why would we employ so many more people dedicated to this than any other company in our space — even ones larger than us? If we wanted to hide our results, why would we have established an industry-leading standard for transparency and reporting on what we’re doing? And if social media were as responsible for polarizing society as some people claim, then why are we seeing polarization increase in the US while it stays flat or declines in many countries with just as heavy use of social media around the world?

Let’s take these one at a time.

- Facebook has a major research program, but it hides the results of that research. That research is what Frances Haugen revealed, and what the Wall Street Journal wrote about. It’s not that they don’t research these issues, but that they conceal any research results that reflect badly on the company.

- The fact that Facebook employs a large moderation force isn't the point. The point is that the moderation force is inadequate to the task, which is why 95% of prohibited content slips through. And the moderation force is outsourced, because human moderation represents a flaw in the algorithm, and Facebook doesn't want that flaw to be a core part of the company.

- The "industry-leading standard for transparency" apparently also includes cutting off independent researchers from Facebook data and sending them skewed results.

- The perfect setup for social-media-fueled polarization is a democratic two-party system. Other parts of the world are either totalitarian or multi-party parliamentary systems, not pure us-vs.-them politics. This is why Facebook’s algorithm makes things much worse in the U.S. than elsewhere.

At the heart of these accusations is this idea that we prioritize profit over safety and well-being. That’s just not true. For example, one move that has been called into question is when we introduced the Meaningful Social Interactions change to News Feed. This change showed fewer viral videos and more content from friends and family — which we did knowing it would mean people spent less time on Facebook, but that research suggested it was the right thing for people’s well-being. Is that something a company focused on profits over people would do?

No, but it is exactly what a company focused on the supremacy and purity of the algorithm would do, because it sees all problems as just flaws in the algorithm that can be cured with tweaks to the algorithm.

The argument that we deliberately push content that makes people angry for profit is deeply illogical. We make money from ads, and advertisers consistently tell us they don’t want their ads next to harmful or angry content. And I don’t know any tech company that sets out to build products that make people angry or depressed. The moral, business and product incentives all point in the opposite direction.

I don't believe Facebook's management deliberately inflames anger. I believe the algorithm rewards engagement (clicks, likes, shares), and anger creates engagement. The point is not that Facebook rewards anger; it is that Facebook does nothing to slow it down, so the algorithm inflames it. And note that if 90% of the content is normal sharing of puppies and grandchildren and funny memes, and 10% is angry politics, that's still way more anger than anyone would encounter in daily life, especially if Facebook's algorithm helps to spread it.

But of everything published, I’m particularly focused on the questions raised about our work with kids. I’ve spent a lot of time reflecting on the kinds of experiences I want my kids and others to have online, and it’s very important to me that everything we build is safe and good for kids.

Child safety requires intensive moderation. Intensive moderation interferes with the algorithm. Therefore, even if child safety is a problem, Facebook is not going to realistically invest in solving that problem. It’s not that they set out to hurt kids. The kids are just collateral damage of the behavior of the algorithm.

The reality is that young people use technology. Think about how many school-age kids have phones. Rather than ignoring this, technology companies should build experiences that meet their needs while also keeping them safe. We’re deeply committed to doing industry-leading work in this area. A good example of this work is Messenger Kids, which is widely recognized as better and safer than alternatives.

If you create a toxic machine and then try your best to contain the effluent, are you good, or evil?

We’ve also worked on bringing this kind of age-appropriate experience with parental controls for Instagram too. But given all the questions about whether this would actually be better for kids, we’ve paused that project to take more time to engage with experts and make sure anything we do would be helpful.

In other words, Facebook can’t continue with its normal operations with kids under this much scrutiny. What does that tell you?

Like many of you, I found it difficult to read the mischaracterization of the research into how Instagram affects young people. As we wrote in our Newsroom post explaining this: “The research actually demonstrated that many teens we heard from feel that using Instagram helps them when they are struggling with the kinds of hard moments and issues teenagers have always faced. In fact, in 11 of 12 areas on the slide referenced by the Journal — including serious areas like loneliness, anxiety, sadness and eating issues — more teenage girls who said they struggled with that issue also said Instagram made those difficult times better rather than worse.”

But when it comes to young people’s health or well-being, every negative experience matters. It is incredibly sad to think of a young person in a moment of distress who, instead of being comforted, has their experience made worse. We have worked for years on industry-leading efforts to help people in these moments and I’m proud of the work we’ve done. We constantly use our research to improve this work further.

“We only harm some teenagers sometimes, and we help others,” is a very weak defense. If this were true and defensible, why hide and ignore the research? Because, by definition, the algorithm is good and the side-effects are worth it.

Similar to balancing other social issues, I don’t believe private companies should make all of the decisions on their own. That’s why we have advocated for updated internet regulations for several years now. I have testified in Congress multiple times and asked them to update these regulations. I’ve written op-eds outlining the areas of regulation we think are most important related to elections, harmful content, privacy, and competition.

We’re committed to doing the best work we can, but at some level the right body to assess tradeoffs between social equities is our democratically elected Congress.

If Facebook is so sure it needs to be regulated, why doesn’t it take on a voluntary set of regulations? “Please give us rules to stop us from hurting people” is a pusillanimous position for the dominant social network company. The fact that Facebook is not leading in the proposal of rational regulations is a clear sign that it believes the algorithm is more important than the harm it causes. It won’t stop until we stop it.

For example, what is the right age for teens to be able to use internet services? How should internet services verify people’s ages? And how should companies balance teens’ privacy while giving parents visibility into their activity?

If we’re going to have an informed conversation about the effects of social media on young people, it’s important to start with a full picture. We’re committed to doing more research ourselves and making more research publicly available.

Facebook’s job as a market leader is not just to ask these questions, but to answer them.

That said, I’m worried about the incentives that are being set here. We have an industry-leading research program so that we can identify important issues and work on them. It’s disheartening to see that work taken out of context and used to construct a false narrative that we don’t care. If we attack organizations making an effort to study their impact on the world, we’re effectively sending the message that it’s safer not to look at all, in case you find something that could be held against you. That’s the conclusion other companies seem to have reached, and I think that leads to a place that would be far worse for society. Even though it might be easier for us to follow that path, we’re going to keep doing research because it’s the right thing to do.

Actually, if Facebook cared about this from the viewpoint of the public good, it would fund independent researchers to work on the problem and then publish their results openly, not hide what looks bad.

What is the purpose of Facebook research? It is to determine what is best for the algorithm. It is to determine how best to balance the needs of the algorithm with the harm that it does. Once you recognize that, you realize why Facebook wants to do a lot of research for itself and publish only what makes it look good.

I know it’s frustrating to see the good work we do get mischaracterized, especially for those of you who are making important contributions across safety, integrity, research and product. But I believe that over the long term if we keep trying to do what’s right and delivering experiences that improve people’s lives, it will be better for our community and our business. I’ve asked leaders across the company to do deep dives on our work across many areas over the next few days so you can see everything that we’re doing to get there.

Try reading this as, "We do positive things and also cause harm, so why does everyone talk about the harm?" Then it makes sense. But if you create a lifesaving medication that comforts 10,000,000 people and kills 100,000, we still write about the danger. "We do good" is not a sufficient justification for "Stop focusing on the harm that we do."

When I reflect on our work, I think about the real impact we have on the world — the people who can now stay in touch with their loved ones, create opportunities to support themselves, and find community. This is why billions of people love our products. I’m proud of everything we do to keep building the best social products in the world and grateful to all of you for the work you do here every day.

All hail the algorithm. Pay no attention to the hate we spread, the misinformation, the teens tormented by body image issues, the preferential treatment for celebrities, the violence, the divisiveness, the doxxing, the people who believed vaccines were harmful because of what they read on Facebook and then died of COVID. Stop paying attention to all that trivial stuff. Because the algorithm is good. It will save humanity.

Hold Facebook responsible. Crush Facebook.

One of your best posts, Josh. Zuck is the epitome of tone deaf. It's dangerous that he's effectively unaccountable. His board is ineffectual.

I’m betting that Sandberg leaves within three months.

Agree with Phil. This is amazing — point-by-point refutation of the entire statement. Should be read, shared and reread.

“anger creates engagement. The point is not that Facebook rewards anger, it is that it fails to do anything to slow it down so the algorithm inflames it. And note that if 90% of the content is normal sharing of puppies and grandchildren and funny memes, and 10% is angry politics, that’s still way more anger than anyone would encounter in daily life”

The solution is not “Crushing”, if the above is true.

But if that is what we want to go with – where the issue is allowing anger and untruth to spread, we may have another target to Crush – AT&T!

https://www.reuters.com/investigates/special-report/usa-oneamerica-att/

Incredibly insightful post, Josh. Were any of us really surprised by either her "revelations" or Zuck's response? Algorithms don't feel, people do. But people write them and have the power to change them. Put the Plumbing ahead of the good of the People and this is what you get. The sad part is we continue to let them grade their own homework. Broadcast Network content has to live up to licensed standards and practices, and the advertising industry it runs on established its own means of self-regulation called the NARB long ago. Both are held to standards and practices. Since the digital walled gardens created their own "marketplace" devoid of any standards, practices or regulations, we have allowed them the freedom to grade their own homework, and advertisers simply believe, while the public gets their echo chamber. They deserve the regulations that are coming to them. Sadly, they will likely come in the shape of a pitchfork.

I’ve posted a comment pointing to your blog post under this Washington Post column by Jennifer Rubin: https://www.washingtonpost.com/opinions/2021/10/06/what-facebooks-whistleblower-achieved/

I really like this piece. I have been very skeptical of Facebook ever since I first used it as a budding technical writer, and I have only seen it dumb down internet users more and more while growing so powerful that it just announced what is to be one of the most powerful computers on earth. From so many perspectives it is the epitome of a machine built for greed and control at a higher level, where the simplest user interface elements like a reasonable search engine are obfuscated because that would actually make it a useful tool instead of a propaganda machine to addict you. Look, I know the people who built this thing are not SS officers, but they serve a twisted God that I want no part of, and I will do my part to take it down in my humble way.