Is Facebook responsible for hosting a murder broadcast?

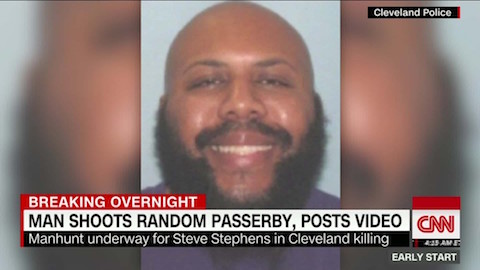

Facebook user Steve Stephens used Facebook on Sunday to build an audience for murder, posting videos of his plan to shoot somebody, the shooting itself, and his confession. Facebook explained the changes it will make in reviewing videos like this, but avoided taking responsibility or apologizing. In the end, how much fault lies with Facebook?

While Facebook’s statement is clear, the company does not apologize

Facebook VP Justin Osofsky posted a 450-word statement about the shooting on Monday. It’s a model of clarity. Let’s take it apart. I’ve highlighted weasel words and added analysis.

April 17, 2017

Community Standards and Reporting

By Justin Osofsky, VP, Global Operations

On Sunday morning, a man in Cleveland posted a video of himself announcing his intent to commit murder, then two minutes later posted another video of himself shooting and killing an elderly man. A few minutes after that, he went live, confessing to the murder. It was a horrific crime — one that has no place on Facebook, and goes against our policies and everything we stand for.

Analysis: The lede states the facts concisely, then states Facebook's clear opposition to videos like this in a single closing sentence. By using only one vague adjective, "horrific," the statement avoids the loss of credibility that comes from repeated weasel words. My one quibble is with the title, which doesn't make it clear that this post refers to video of a murder. Notice also that while Facebook states clear opposition, it does not apologize.

As a result of this terrible series of events, we are reviewing our reporting flows to be sure people can report videos and other material that violates our standards as easily and quickly as possible. In this case, we did not receive a report about the first video, and we only received a report about the second video — containing the shooting — more than an hour and 45 minutes after it was posted. We received reports about the third video, containing the man’s live confession, only after it had ended.

We disabled the suspect’s account within 23 minutes of receiving the first report about the murder video, and two hours after receiving a report of any kind. But we know we need to do better.

Analysis: Is this being defensive or stating the facts? Again, Facebook does not take responsibility for inflaming a murder, only for the speed with which it takes down the video, which it pledges to improve. The lack of weasel words in the statement keeps it from seeming defensive.

In addition to improving our reporting flows, we are constantly exploring ways that new technologies can help us make sure Facebook is a safe environment. Artificial intelligence, for example, plays an important part in this work, helping us prevent the videos from being reshared in their entirety. (People are still able to share portions of the videos in order to condemn them or for public awareness, as many news outlets are doing in reporting the story online and on television). We are also working on improving our review processes. Currently, thousands of people around the world review the millions of items that are reported to us every week in more than 40 languages. We prioritize reports with serious safety implications for our community, and are working on making that review process go even faster.

Analysis: While this digression about technology isn't as clear as the rest of the statement, it does provide some insight into the scale of the problem. Weasel words like "constantly," "important," and "millions" make this paragraph appear more defensive than the rest of the statement.

Keeping our global community safe is an important part of our mission. We are grateful to everyone who reported these videos and other offensive content to us, and to those who are helping us keep Facebook safe every day.

Analysis: Actually, it’s not safe. That’s the problem. This ties a neat bow on things, but they’re not so neatly tied up as it might appear.

Timeline of Events

11:09AM PDT — First video, of intent to murder, uploaded. Not reported to Facebook.

11:11AM PDT — Second video, of shooting, uploaded.

11:22AM PDT — Suspect confesses to murder while using Live, is live for 5 minutes.

11:27AM PDT — Live ends, and Live video is first reported shortly after.

12:59PM PDT — Video of shooting is first reported.

1:22PM PDT — Suspect’s account disabled; all videos no longer visible to public.

Analysis: By including a timeline, Facebook both clarifies exactly what happened and explains why it didn’t act sooner. This is far more effective than protests that it works as fast as it can.

Is Facebook responsible for this murder or for broadcasting it?

There’s a problem with platforms like Facebook.

Facebook is not a common carrier. If someone uses the phone or Gmail to arrange a murder, you don’t blame the phone company or Google. That’s because they don’t regulate the communication on their channels and we don’t expect them to.

On the other hand, you won't see a gratuitous video of murder on the New York Times' site, because the Times regulates everything that appears on its site. It even moderates the comments to remove offensive material quickly.

Facebook lives between these extremes. Anyone can post offensive words, pictures, links, and videos on Facebook. If they violate Facebook’s terms of service and if somebody reports them, Facebook will eventually notice and take them down. This process is imperfect; not only does it leave offensive things live for a while, it also occasionally censors things it shouldn’t, like a Pulitzer Prize-winning photo that included the image of a nude girl. (For an amusing aside on the absurdity of Facebook’s community standards, see New Yorker cartoon editor Bob Mankoff’s musings on “Nipplegate.”)

Even if Facebook improves the speed and machine intelligence applied to the problem, it’s not going away. Censoring Facebook would kill it. Leaving it completely uncensored would make it even more of a cesspool. You’re fooling yourself if you don’t recognize that awful posts like the Stephens video are an inherent part of Facebook that will never go away.